File Incremental Loads in ADF: Azure Data Engineering

Azure Data Factory (ADF) is a cloud-based data integration service that allows you to create, schedule, and manage data pipelines. Incremental loading is a common scenario in data integration, where you only process and load the new or changed data since the last execution, instead of processing the entire dataset.
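
To make the idea concrete for file-based sources, here is a minimal Python sketch (not ADF code) that picks only the files modified after a stored watermark. The function name `files_modified_since`, the local folder, and the numeric watermark are assumptions made for illustration; in ADF the equivalent filtering would be configured on the pipeline's source rather than written by hand.

```python
import os
import tempfile
from pathlib import Path
from typing import List


def files_modified_since(folder: Path, watermark: float) -> List[Path]:
    """Return the files in `folder` modified strictly after `watermark`.

    `watermark` is a POSIX timestamp recorded at the end of the previous run
    (a hypothetical convention chosen for this sketch).
    """
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.stat().st_mtime > watermark
    )


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        folder = Path(tmp)
        old_file = folder / "old.csv"
        new_file = folder / "new.csv"
        old_file.write_text("id,name\n1,a\n")
        new_file.write_text("id,name\n2,b\n")
        # Force known modification times so the demo is deterministic.
        os.utime(old_file, (100, 100))
        os.utime(new_file, (200, 200))
        picked = files_modified_since(folder, watermark=150)
        print([p.name for p in picked])  # prints ['new.csv']
```

Only `new.csv` is selected because its modification time is later than the watermark; a full load would have reprocessed both files.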

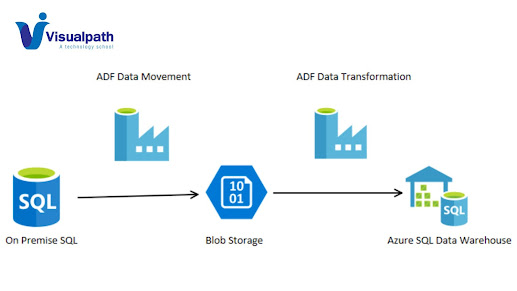

Below are the general steps to implement incremental loads in Azure Data Factory:

1. Source and Destination Setup: Ensure that your source and destination datasets are appropriately configured in your data factory. For incremental loads, you typically need a way to identify the new or changed data in the source. This might involve having a last modified timestamp or some kind of indicator for new records.

2. Staging Tables or Files: Create staging tables or files in your destination data store to temporarily store the incoming data. These staging tables can be used to store the new or changed data before it is merged into the final destination.

3. Data Copy Activity: Use the "Copy Data" activity in your pipeline to copy data from the source to the staging area. Configure the copy activity to use the appropriate source and destination datasets.

4. Data Transformation (Optional): If you need to perform any data transformations, you can include a data transformation activity (for example, a mapping data flow) in your pipeline.

5. Merge or Upsert Operation: Use a database-specific operation (e.g., the MERGE statement in SQL Server, or an upsert operation in Azure Synapse Analytics) to merge the data from the staging area into the final destination. Ensure that you only insert or update records that are new or changed since the last execution.

6. Logging and Tracking: Implement logging and tracking mechanisms to keep a record of when the incremental load was last executed and what data was processed. This information can be useful for troubleshooting and monitoring the data integration process.

7. Scheduling: Schedule your pipeline to run at regular intervals based on your business requirements. Consider factors such as data volume, processing time, and business SLAs when determining the schedule.

8. Error Handling: Implement error handling mechanisms to capture and handle any errors that might occur during the pipeline execution. This could include retry policies, notifications, or logging detailed error information.

9. Testing: Thoroughly test your incremental load pipeline with various scenarios, including new records, updated records, and potential edge cases.

Remember that the specific implementation details may vary based on your source and destination systems. If you're using a database, understanding the capabilities of your database platform can help optimize the incremental load process.
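
As one illustration of the retry policies mentioned under error handling, the following Python sketch retries a failing task with exponential backoff and logs each failure. The helper name `run_with_retry` and its parameters are hypothetical and chosen for this example; ADF activities have their own retry settings, so this only shows the underlying idea.

```python
import logging
import time

logger = logging.getLogger("incremental_load")


def run_with_retry(task, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call `task()` until it succeeds or `max_attempts` is reached.

    The wait doubles after each failure (base_delay, 2 * base_delay, ...).
    `sleep` is injectable so the function can be tested without waiting.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:
            # Record detailed error information for later troubleshooting.
            logger.warning("attempt %d/%d failed: %s", attempt, max_attempts, exc)
            if attempt == max_attempts:
                raise  # give up and surface the last error to the caller
            sleep(base_delay * 2 ** (attempt - 1))
```

Wrapping a load step as `run_with_retry(lambda: load_step())`, where `load_step` is whatever function performs the load, would retry transient failures while still raising the final error so that a notification or alert can be triggered.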

