Get started analyzing with Spark | Azure Synapse Analytics

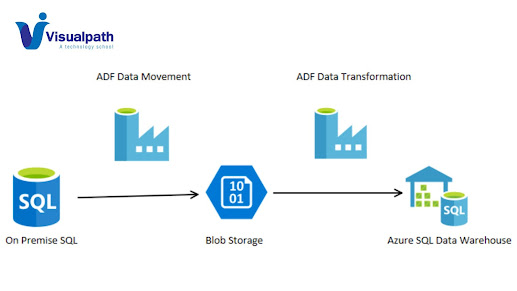

Azure Synapse Analytics (formerly SQL Data Warehouse) is a cloud-based analytics service from Microsoft. It lets users analyze large volumes of data using both on-demand (serverless) and provisioned resources. The Synapse Spark connector lets Spark read and write data stored in Azure Synapse Analytics, making it easier to process and analyze large datasets.

Here are the general steps to use Spark with Azure Synapse Analytics:

1. Set up your Azure Synapse Analytics workspace:

- Create an Azure Synapse Analytics workspace in the Azure portal.

- Set up the necessary databases and tables where your data will be stored.

2. Install and configure Apache Spark:

- Ensure that you have Apache Spark installed on your cluster or environment.

- Configure Spark to work with your Azure Synapse Analytics workspace.

3. Use the Synapse Spark connector:

- The Synapse Spark connector allows Spark to read and write data to/from Azure Synapse Analytics.

- Include the connector in your Spark application by adding the necessary dependencies.

4. Read and write data with Spark:

- Use Spark to read data from Azure Synapse Analytics tables into DataFrames.

- Perform your data processing and analysis using Spark's capabilities.

- Write the results back to Azure Synapse Analytics.

Here is an example of using the Synapse Spark connector in Scala:

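```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder.appName("SynapseSparkExample").getOrCreate()

// Define the Synapse connector options
val options = Map(
  "url" -> "jdbc:sqlserver://<synapse-server-name>.database.windows.net:1433;database=<database-name>",
  "dbtable" -> "<schema-name>.<table-name>",
  "user" -> "<username>",
  "password" -> "<password>",
  "driver" -> "com.microsoft.sqlserver.jdbc.SQLServerDriver",
  // The com.databricks.spark.sqldw connector stages data in Azure storage, so it
  // also expects a staging location and a storage-credential setting. The path
  // below is a placeholder, and the storage account credential is assumed to be
  // configured in the Spark session already.
  "tempDir" -> "abfss://<container-name>@<storage-account-name>.dfs.core.windows.net/<temp-directory>",
  "forwardSparkAzureStorageCredentials" -> "true"
)

// Read data from Azure Synapse Analytics into a DataFrame
val synapseData = spark.read.format("com.databricks.spark.sqldw").options(options).load()

// Perform Spark operations on the data

// Write the results back to Azure Synapse Analytics.
// The target table already exists, so the default save mode (error if exists)
// would fail; "append" adds rows, "overwrite" would replace the table.
synapseData.write.format("com.databricks.spark.sqldw").options(options).mode("append").save()
```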
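The "Perform Spark operations on the data" step in the example is left open. As a rough sketch of what could go there, the snippet below filters and aggregates a DataFrame. The column names `region` and `amount`, the object name `TransformSketch`, and the in-memory sample rows are all assumptions made for illustration; a real Synapse table will have its own schema. It runs against a local Spark session so it can be tried without a Synapse workspace.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, sum}

object TransformSketch {
  // Hypothetical transformation: keeps rows with a positive "amount" and
  // totals them per "region". Both column names are assumed, not taken
  // from any real Synapse table.
  def totalsByRegion(df: DataFrame): DataFrame =
    df.filter(col("amount") > 0)
      .groupBy("region")
      .agg(sum("amount").as("total_amount"))

  def main(args: Array[String]): Unit = {
    // Local session for experimentation only; on a cluster the session
    // is normally provided for you.
    val spark = SparkSession.builder
      .appName("TransformSketch")
      .master("local[*]")
      .getOrCreate()
    import spark.implicits._

    // Stand-in for the DataFrame that would be loaded from Synapse.
    val sample = Seq(("east", 10.0), ("east", 5.0), ("west", -1.0))
      .toDF("region", "amount")

    totalsByRegion(sample).show()
    spark.stop()
  }
}
```

In the connector example, the same function could be applied to `synapseData` before the write, provided the table actually has columns with these names.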

Make sure to replace placeholders such as `<synapse-server-name>`, `<database-name>`, `<schema-name>`, `<table-name>`, `<username>`, and `<password>` with your actual Synapse Analytics details.
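Rather than pasting real credentials into source code, the placeholder values can be supplied at run time. The sketch below is one possible way to do that in plain Scala. The object `SynapseOptions`, its methods, and the `SYNAPSE_*` variable names are hypothetical and not part of any connector API; the option keys and URL shape simply mirror the example above.

```scala
// Hypothetical helper: names here are illustrative, not a connector API.
object SynapseOptions {
  private val required = Seq(
    "SYNAPSE_SERVER", "SYNAPSE_DATABASE", "SYNAPSE_TABLE",
    "SYNAPSE_USER", "SYNAPSE_PASSWORD"
  )

  // Builds the JDBC URL in the same shape as the example above.
  def jdbcUrl(server: String, database: String): String =
    s"jdbc:sqlserver://${server}.database.windows.net:1433;database=${database}"

  // Returns the options map, or a message naming every missing setting.
  def fromEnv(env: Map[String, String]): Either[String, Map[String, String]] = {
    val missing = required.filterNot(env.contains)
    if (missing.nonEmpty) Left(s"Missing settings: ${missing.mkString(", ")}")
    else Right(Map(
      "url" -> jdbcUrl(env("SYNAPSE_SERVER"), env("SYNAPSE_DATABASE")),
      "dbtable" -> env("SYNAPSE_TABLE"),
      "user" -> env("SYNAPSE_USER"),
      "password" -> env("SYNAPSE_PASSWORD"),
      "driver" -> "com.microsoft.sqlserver.jdbc.SQLServerDriver"
    ))
  }
}
```

Calling `SynapseOptions.fromEnv(sys.env)` would then yield either the options map to pass to `.options(...)` or a single error listing what is not set, which tends to be easier to diagnose than a connection failure. On a managed platform, a secret store is usually a better home for the password than an environment variable.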

Connector options and behavior can change between releases, so check the latest documentation for Azure Synapse Analytics and the Synapse Spark connector for updates or additional features.

